While upgrading an ESX 3.5 U4 host to vSphere ESXi 4.0 U1, I noticed some very strange behaviour.

In my environment, the upgrade procedure requires reinstalling ESXi from scratch and then replicating the previous configuration with a custom-made PowerShell script.

The ESXi installation phase, normally very fast, took a huge amount of time. That forced me to reinstall the server once more so I could watch the logs carefully.

This is what I found:

CLUE #1

On the installation LUN selection screen, where you choose the LUN that will hold the hypervisor, a "strange" empty DISK 0 with a size of 0 bytes appears (see figure 1-1).

figure 1-1

CLUE #2

Pressing ALT-F12 on the server console to switch to the VMkernel log screen reveals a huge number of warning messages like the following:

Jan 18 10:19:44 vmkernel: 44:22:15:55.304 cpu3:5453)NMP: nmp_CompleteCommandForPath: Command 0x12 (0x410007063440) to NMP device "mpx.vmhba2:C0:T2:L0" failed on physical path "vmhba2:C0:T2:L0" H:0x0 D:0x2 P:0x0 Valid sense data: 0x5 0x25 0x0.

Jan 18 10:19:44 vmkernel: 44:22:15:55.304 cpu3:5453)WARNING: NMP: nmp_DeviceRetryCommand: Device "mpx.vmhba2:C0:T2:L0": awaiting fast path state update for failover with I/O blocked. No prior reservation exists on the device.

Jan 18 10:19:45 vmkernel: 44:22:15:56.134 cpu6:4363)WARNING: NMP: nmp_DeviceAttemptFailover: Retry world failover device "mpx.vmhba2:C0:T2:L0" - issuing command 0x410007063440

Jan 18 10:19:45 vmkernel: 44:22:15:56.134 cpu3:41608)WARNING: NMP: nmp_CompleteRetryForPath: Retry command 0x12 (0x410007063440) to NMP device "mpx.vmhba2:C0:T2:L0" failed on physical path "vmhba2:C0:T2:L0" H:0x0 D:0x2 P:0x0 Valid sense data: 0x5 0x25 0x0.

Jan 18 10:19:45 vmkernel: 44:22:15:56.134 cpu3:41608)WARNING: NMP: nmp_CompleteRetryForPath: Logical device "mpx.vmhba2:C0:T2:L0": awaiting fast path state update before retrying failed command again...

Jan 18 10:19:46 vmkernel: 44:22:15:57.134 cpu5:4363)WARNING: NMP: nmp_DeviceAttemptFailover: Retry world failover device "mpx.vmhba2:C0:T2:L0" - issuing command 0x410007063440

Jan 18 10:19:46 vmkernel: 44:22:15:57.134 cpu3:41608)WARNING: NMP: nmp_CompleteRetryForPath: Retry command 0x12 (0x410007063440) to NMP device "mpx.vmhba2:C0:T2:L0" failed on physical path "vmhba2:C0:T2:L0" H:0x0 D:0x2 P:0x0 Valid sense data: 0x5 0x2

You don't need to be a vmkernel storage engineer to correlate cause and effect.
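The failing command can also be read with the standard SCSI tables: opcode 0x12 is INQUIRY, device status D:0x2 is CHECK CONDITION, and the sense data 0x5 0x25 0x0 means sense key ILLEGAL REQUEST with ASC/ASCQ LOGICAL UNIT NOT SUPPORTED, which suggests the target rejects every probe of that LUN 0 path. The Python sketch below decodes one of the log lines above. It is only an illustration: the regular expression assumes the exact message layout shown in this excerpt, `decode_nmp_failure` is a hypothetical helper, and the lookup tables contain only the values that appear in these logs.

```python
import re

# SCSI codes limited to the values that appear in the vmkernel log excerpt.
SCSI_OPCODES = {0x12: "INQUIRY"}
DEVICE_STATUS = {0x00: "GOOD", 0x02: "CHECK CONDITION"}
SENSE_KEYS = {0x05: "ILLEGAL REQUEST"}
ASC_ASCQ = {(0x25, 0x00): "LOGICAL UNIT NOT SUPPORTED"}

# Assumption: the NMP message layout is exactly the one shown in the excerpt.
NMP_FAILURE = re.compile(
    r'[Cc]ommand 0x(?P<op>[0-9a-f]+) \(0x[0-9a-f]+\) to NMP device "(?P<device>[^"]+)"'
    r' failed on physical path "(?P<path>[^"]+)"'
    r' H:0x[0-9a-f]+ D:0x(?P<status>[0-9a-f]+) P:0x[0-9a-f]+'
    r' Valid sense data: 0x(?P<key>[0-9a-f]+) 0x(?P<asc>[0-9a-f]+) 0x(?P<ascq>[0-9a-f]+)'
)


def decode_nmp_failure(line):
    """Return a dict describing a failed NMP command, or None if the line doesn't match."""
    m = NMP_FAILURE.search(line)
    if m is None:
        return None
    op, status, key = (int(m[g], 16) for g in ("op", "status", "key"))
    asc, ascq = int(m["asc"], 16), int(m["ascq"], 16)
    return {
        "device": m["device"],
        "path": m["path"],
        # Unknown codes fall back to their raw hex value.
        "command": SCSI_OPCODES.get(op, hex(op)),
        "status": DEVICE_STATUS.get(status, hex(status)),
        "sense_key": SENSE_KEYS.get(key, hex(key)),
        "asc_ascq": ASC_ASCQ.get((asc, ascq), f"{asc:#04x}/{ascq:#04x}"),
    }


SAMPLE = (
    'Jan 18 10:19:44 vmkernel: 44:22:15:55.304 cpu3:5453)NMP: nmp_CompleteCommandForPath: '
    'Command 0x12 (0x410007063440) to NMP device "mpx.vmhba2:C0:T2:L0" failed on physical '
    'path "vmhba2:C0:T2:L0" H:0x0 D:0x2 P:0x0 Valid sense data: 0x5 0x25 0x0.'
)

if __name__ == "__main__":
    print(decode_nmp_failure(SAMPLE))
```

The same function also matches the "Retry command" lines, since the pattern accepts both capitalisations of "command".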

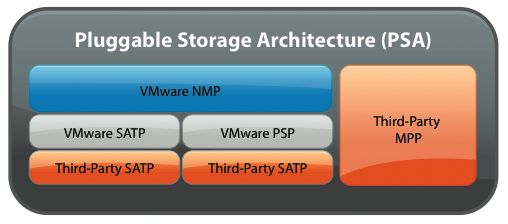

The new VMware Pluggable Storage Architecture (PSA) behaves differently from ESX 3.5. During the initial storage scan it finds a "virtual" disk 0 device, exposed to ESX as LUN 0 by my storage virtualization appliance (FalconStor NSS), and tries to handle it just like all the other "real" SAN devices.

This generates a flood of errors and retries that slows down the boot phase and, afterwards, the VMkernel every time you rescan the storage paths.

The output of the following esxcli command confirms the suspicion:

# esxcli --server $HOST --username $USER --password $PASSWD nmp device list

mpx.vmhba3:C0:T0:L0

Device Display Name: Local VMware Disk (mpx.vmhba3:C0:T0:L0)

Storage Array Type: VMW_SATP_LOCAL

Storage Array Type Device Config:

Path Selection Policy: VMW_PSP_FIXED

Path Selection Policy Device Config: {preferred=vmhba3:C0:T0:L0;current=vmhba3:C0:T0:L0}

Working Paths: vmhba3:C0:T0:L0

eui.000b080080002001

Device Display Name: Pillar Fibre Channel Disk (eui.000b080080002001)

Storage Array Type: VMW_SATP_DEFAULT_AA

Storage Array Type Device Config:

Path Selection Policy: VMW_PSP_FIXED

Path Selection Policy Device Config: {preferred=vmhba2:C0:T3:L63;current=vmhba2:C0:T3:L63}

Working Paths: vmhba2:C0:T3:L63

eui.000b08008a002000

Device Display Name: Pillar Fibre Channel Disk (eui.000b08008a002000)

Storage Array Type: VMW_SATP_DEFAULT_AA

Storage Array Type Device Config:

Path Selection Policy: VMW_PSP_FIXED

Path Selection Policy Device Config: {preferred=vmhba2:C0:T1:L60;current=vmhba2:C0:T1:L60}

Working Paths: vmhba2:C0:T1:L60

mpx.vmhba2:C0:T2:L0

Device Display Name: FALCON Fibre Channel Disk (mpx.vmhba2:C0:T2:L0)

Storage Array Type: VMW_SATP_DEFAULT_AA

Storage Array Type Device Config:

Path Selection Policy: VMW_PSP_FIXED

Path Selection Policy Device Config: {preferred=vmhba2:C0:T2:L0;current=vmhba2:C0:T2:L0}

Working Paths: vmhba2:C0:T2:L0

mpx.vmhba0:C0:T0:L0

Device Display Name: Local Optiarc CD-ROM (mpx.vmhba0:C0:T0:L0)

Storage Array Type: VMW_SATP_LOCAL

Storage Array Type Device Config:

Path Selection Policy: VMW_PSP_FIXED

Path Selection Policy Device Config: {preferred=vmhba0:C0:T0:L0;current=vmhba0:C0:T0:L0}

Working Paths: vmhba0:C0:T0:L0

naa.6000d77800005acc528d69135fbc1c44

Device Display Name: FALCON Fibre Channel Disk (naa.6000d77800005acc528d69135fbc1c44)

Storage Array Type: VMW_SATP_DEFAULT_AA

Storage Array Type Device Config:

Path Selection Policy: VMW_PSP_RR

Path Selection Policy Device Config: {policy=rr,iops=1000,bytes=10485760,useANO=0;lastPathIndex=0: NumIOsPending=0,numBytesPending=0}

Working Paths: vmhba1:C0:T2:L68, vmhba2:C0:T2:L68

naa.6000d77800008c5576716bd63f8f9901

Device Display Name: FALCON Fibre Channel Disk (naa.6000d77800008c5576716bd63f8f9901)

Storage Array Type: VMW_SATP_DEFAULT_AA

Storage Array Type Device Config:

Path Selection Policy: VMW_PSP_RR

Path Selection Policy Device Config: {policy=rr,iops=1000,bytes=10485760,useANO=0;lastPathIndex=1: NumIOsPending=0,numBytesPending=0}

Working Paths: vmhba1:C0:T2:L3, vmhba2:C0:T2:L3

Looking carefully at the output, you will notice that the mpx.vmhba2 and mpx.vmhba3 devices are identified by a runtime-based name, which differs from the more traditional naa. and eui. identifiers shown for the other devices (for a clear picture of VMware disk identifiers, see the "Identifying disks when working with VMware ESX" KB article).
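On a host with many LUNs, spotting the mpx. devices by eye gets tedious. The Python sketch below assumes you have saved the `nmp device list` text to a string or file; the parsing rules (a bare identifier starts a device, every other line is a "Field: value" pair) are inferred from the listing above rather than from a documented format, and `parse_nmp_devices` and `mpx_devices` are hypothetical helpers. It only lists candidates: the listing above also shows a local CD-ROM under an mpx. name, so deciding which paths are fake still needs judgment.

```python
import re

# A device header is a bare identifier such as "mpx.vmhba2:C0:T2:L0" or
# "naa.6000d778...": no spaces and no trailing colon (inferred from the listing).
DEVICE_ID = re.compile(r"[\w.:-]+")


def parse_nmp_devices(output):
    """Parse `nmp device list` text into {device_id: {field: value}}."""
    devices = {}
    current = None
    for raw in output.splitlines():
        line = raw.strip()
        if not line:
            continue  # tolerate blank lines between fields
        if DEVICE_ID.fullmatch(line) and not line.endswith(":"):
            current = devices.setdefault(line, {})
        elif current is not None and ":" in line:
            # Split on the first colon only: values contain path names with colons.
            key, value = line.split(":", 1)
            current[key.strip()] = value.strip()
    return devices


def mpx_devices(devices):
    """List devices named after their runtime path (mpx.) instead of naa./eui. IDs."""
    return [
        (dev_id, fields.get("Device Display Name", ""), fields.get("Working Paths", ""))
        for dev_id, fields in devices.items()
        if dev_id.startswith("mpx.")
    ]


SAMPLE = """\
mpx.vmhba2:C0:T2:L0
    Device Display Name: FALCON Fibre Channel Disk (mpx.vmhba2:C0:T2:L0)
    Storage Array Type: VMW_SATP_DEFAULT_AA
    Storage Array Type Device Config:
    Path Selection Policy: VMW_PSP_FIXED
    Working Paths: vmhba2:C0:T2:L0
naa.6000d77800008c5576716bd63f8f9901
    Device Display Name: FALCON Fibre Channel Disk (naa.6000d77800008c5576716bd63f8f9901)
    Storage Array Type: VMW_SATP_DEFAULT_AA
    Path Selection Policy: VMW_PSP_RR
    Working Paths: vmhba1:C0:T2:L3, vmhba2:C0:T2:L3
"""

if __name__ == "__main__":
    for dev_id, name, paths in mpx_devices(parse_nmp_devices(SAMPLE)):
        print(f"{dev_id}: {name} via {paths}")
```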

I don't know why FalconStor IPStor NSS exposes those fake LUNs (I'll open an SR); it is probably related to the fact that, as an internal standard, I don't map any LUN number 0 to my ESX servers. Mapping a LUN 0 would surely hide the issue.

Anyway, I've found another workaround.

The following commands add two new claim rules that MASK (hide) all the fake LUN 0 paths, using the usual esxcli command line:

# esxcli --server $HOST --username $USER --password $PASSWD corestorage claimrule add -P MASK_PATH -r 109 -t location -A vmhba2 -C 0 -T 2 -L 0

# esxcli --server $HOST --username $USER --password $PASSWD corestorage claimrule add -P MASK_PATH -r 110 -t location -A vmhba3 -C 0 -T 0 -L 0
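Since the location arguments are just the runtime path name split into its parts (adapter, C, T, L), the commands can be generated from a list of fake paths. The Python sketch below only prints the commands for review and runs nothing; `mask_path_commands` is a hypothetical helper that reuses exactly the flags of the two commands above and numbers the rules consecutively from `first_rule`. With the paths and starting rule used above, it reproduces those two commands.

```python
import re

# Runtime path name, e.g. "vmhba2:C0:T2:L0".
PATH = re.compile(r"(vmhba\d+):C(\d+):T(\d+):L(\d+)")
# $HOST, $USER and $PASSWD are expanded by the shell when the command is pasted.
PREFIX = "esxcli --server $HOST --username $USER --password $PASSWD"


def mask_path_commands(paths, first_rule):
    """Build one MASK_PATH location claim rule per path, numbering rules consecutively."""
    commands = []
    for rule, path in enumerate(paths, start=first_rule):
        m = PATH.fullmatch(path)
        if m is None:
            raise ValueError(f"not a runtime path name: {path!r}")
        adapter, channel, target, lun = m.groups()
        commands.append(
            f"{PREFIX} corestorage claimrule add -P MASK_PATH -r {rule} "
            f"-t location -A {adapter} -C {channel} -T {target} -L {lun}"
        )
    return commands


if __name__ == "__main__":
    for cmd in mask_path_commands(["vmhba2:C0:T2:L0", "vmhba3:C0:T0:L0"], first_rule=109):
        print(cmd)
```

Printing instead of executing keeps a human in the loop, which matters here because a wrong location would hide a real datastore path.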

To check the result, run the corestorage claimrule list command:

# esxcli --server $HOST --username $USER --password $PASSWD corestorage claimrule list

Rule Class Type Plugin Matches

---- ----- ---- ------ -------

0 runtime transport NMP transport=usb

1 runtime transport NMP transport=sata

2 runtime transport NMP transport=ide

3 runtime transport NMP transport=block

4 runtime transport NMP transport=unknown

101 runtime vendor MASK_PATH vendor=DELL model=Universal Xport

101 file vendor MASK_PATH vendor=DELL model=Universal Xport

109 runtime location MASK_PATH adapter=vmhba1 channel=0 target=0 lun=0

109 file location MASK_PATH adapter=vmhba1 channel=0 target=0 lun=0

110 runtime location MASK_PATH adapter=vmhba2 channel=0 target=0 lun=0

110 file location MASK_PATH adapter=vmhba2 channel=0 target=0 lun=0

65535 runtime vendor NMP vendor=* model=*

When adapting the claim rule commands to your own host, make sure you set the correct (new) rule number (starting from 102 should be fine) and the correct location, i.e. the vmhba number followed by Channel (C) : Target (T) : LUN (L) of the fake path.

Then reboot the ESX host.
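Picking a free rule number and checking what is actually masked can also be scripted. The Python sketch below parses the `claimrule list` text (column order taken from the listing above, here trimmed to a subset of its rows), returns the first unused rule number from 102 upward, and rebuilds the runtime path names covered by MASK_PATH location rules, so that after the reboot you can compare them with the fake paths identified in the `nmp device list` output. It assumes, as the listing suggests, that `runtime` rows are the rules currently in effect and `file` rows the ones saved in the configuration; `parse_claimrules`, `next_free_rule` and `masked_paths` are hypothetical helpers.

```python
def parse_claimrules(output):
    """Return (rule, class, type, plugin, matches) tuples from `claimrule list` text."""
    rows = []
    for line in output.splitlines():
        parts = line.split(None, 4)
        # Skip the header, the dashed separator and blank lines.
        if len(parts) < 5 or not parts[0].isdigit():
            continue
        rule, klass, rtype, plugin, matches = parts
        rows.append((int(rule), klass, rtype, plugin, matches))
    return rows


def next_free_rule(rows, start=102):
    """Lowest rule number >= start that no existing rule (runtime or file) uses."""
    used = {row[0] for row in rows}
    rule = start
    while rule in used:
        rule += 1
    return rule


def masked_paths(rows, klass="runtime"):
    """Runtime path names hidden by MASK_PATH location rules of the given class."""
    paths = set()
    for _rule, k, rtype, plugin, matches in rows:
        if k == klass and rtype == "location" and plugin == "MASK_PATH":
            fields = dict(item.split("=", 1) for item in matches.split())
            paths.add(
                f"{fields['adapter']}:C{fields['channel']}"
                f":T{fields['target']}:L{fields['lun']}"
            )
    return paths


LISTING = """\
Rule  Class    Type       Plugin     Matches
----  -----    ----       ------     -------
0     runtime  transport  NMP        transport=usb
101   runtime  vendor     MASK_PATH  vendor=DELL model=Universal Xport
101   file     vendor     MASK_PATH  vendor=DELL model=Universal Xport
109   runtime  location   MASK_PATH  adapter=vmhba1 channel=0 target=0 lun=0
109   file     location   MASK_PATH  adapter=vmhba1 channel=0 target=0 lun=0
110   runtime  location   MASK_PATH  adapter=vmhba2 channel=0 target=0 lun=0
110   file     location   MASK_PATH  adapter=vmhba2 channel=0 target=0 lun=0
65535 runtime  vendor     NMP        vendor=* model=*
"""

if __name__ == "__main__":
    rows = parse_claimrules(LISTING)
    print(next_free_rule(rows))
    print(sorted(masked_paths(rows)))
```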